Chapter 11. Usability Plans


A document describing the objectives and approach for conducting a usability test.

The usability test plan describes the goals, method, and approach for a usability test. The test plan includes several different components, from profiles of participants to an outline of a discussion with users. The test plan described here incorporates test objectives, test logistics, user profiles, and the script.

Usability testing is an essential part of the web design diet. In a nutshell, it’s a technique for soliciting feedback on the design of a web site. Usability testing is usually conducted on one participant at a time and attempts to have participants use the site in as close to a real-world setting as possible. Design teams have different approaches to usability testing at their disposal but two crucial milestones remain consistent—the plan describing what you will do during the test, and an account of what came out of the test. This chapter describes the test plan—the document you prepare in advance of testing—and the next chapter describes the report.

There are several aspects of a usability test that need planning, and some people give each its own document. Other usability researchers create a single test plan, addressing all aspects of the test in a single document. This chapter will treat usability test plans as single documents but will indicate where you might split the document if you need to have several deliverables.

What Makes a Good Usability Plan?

The basic elements of a usability test answer three questions: What do we want out of this test, how will we conduct the test, and what will we ask users during the test? With those questions answered, you’ve got the barest information necessary to make a test happen.

Tip: How to Write Good Scenarios

Determining exactly what scenarios to test is beyond the scope of this book, but not beyond the scope of The Handbook of Usability Testing, by Jeffrey Rubin and Dana Chisnell.

Establishes Objectives

Objectives for a usability plan answer the question, “What will we get out of this test?” For usability testing, objectives establish boundaries and constraints, allowing us to focus on important areas to inform the design process. Imagine we’re designing a health encyclopedia for a medical insurance company. The objectives for such a test might look like this:

• Determine which version of the disease description page helps users retain the most information about a particular disease.

• Determine which version of the disease description page is the most actionable, giving users a clear idea of what they can do next.

• Determine if the new navigation structure makes it easier for users to locate a particular disease.

Centers on Scenarios

Test scenarios run from the general to the specific. For example, in some cases your scenarios may be as straightforward as “present screen to user and ask for impressions.” In other cases, you may want to ask the participant to imagine himself in a specific situation and use the web site to address the situation: “You have just come down with the flu and you want to see if any of your symptoms put you at high risk.”

Your plan should list, in one place, all the scenarios you’ll test. If necessary, you can elaborate on the scenarios on separate pages. Ultimately, you need to create a tool for the moderator to help him or her facilitate the test.

Directs Moderator

The two main audiences for usability test plans are the project stakeholders and the test facilitator—the person doing most of the orchestration during the test. There may be other players in the test—people taking notes, people managing the facility, people working the recording equipment—or there may be one person who does it all. The facilitator may be someone on your team (i.e., you) or it may be a contractor or freelancer you’ve hired for this purpose. In either case, make the script as specific as possible.

During the test the moderator will be thinking about so many things at the same time—what the participant is doing right then, whether the recording devices are working, what will happen when the user clicks a button or link—so the script needs to guide the moderator, and be a source of comfort and focus whenever he or she looks down at it.

Formatting your document like a script helps the moderator distinguish between “stage directions” and things that he or she needs to say to the participant. Other kinds of useful information are specific questions to ask participants as part of a scenario, what kinds of “gotchas” participants might run into, and where to go next.

Acknowledges Limitations of Test Materials

In the ideal world, every usability test will be run against a fully operational prototype. (Perhaps by the time you’re reading this, that will be true. Things move very quickly around here.) More often than not, we find ourselves soliciting feedback on sketches, hacked-together PDFs, HTML screens devoid of real content, or just one comp.

Can you still get reasonable usability results out of these kinds of tests? As a designer, my philosophy is this: Some feedback is better than no feedback. As a guy on the hook for writing a test plan, I’m concerned with crafting a script that acknowledges where our test materials fall short. The plan should describe the materials used in the test and highlight potential obstacles in specific scenarios. Make sure the script doesn’t ask moderators or participants to do things that the prototype, sketches, or comps can’t do.

Tips for Discussing Usability Plans

A test plan isn’t a hard story to tell, especially if the project team has bought into the idea of usability testing. While other documents in this book lend themselves to different meeting structures, the only decision you’ll need to make for a usability test plan is how much time to spend on each section.

If you have only one meeting to review a test plan, make sure you cover each part—don’t leave anything out—but you don’t need to go into great detail on the logistics and methodology. If your stakeholders aren’t big “detail people,” you can also just hit the high points of the script, describing what scenarios you’re testing and how those correspond to the different functions or areas of the web site.

Scope creep: Losing sight of objectives

When presented with the opportunity to talk to actual end users, some stakeholders and team members are like kids in a candy store. They may start suggesting questions and tasks—reasonable though they might be—that are outside the scope of the test. Some stakeholders may use the posttest questionnaire, for example, to solicit feedback about other areas of their business. When this happens, the stakeholders need a reminder about the purpose of the test.

The flip side of this coin is that the suggested questions and modifications may fall within the scope of your script, but the script has become so long that there’s no way to get through it all in the time allotted. This is a good indication that your objectives are not specific enough—they’re not serving as an effective filter to keep the test focused.
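One rough way to spot an overlong script before the review meeting is to add up time estimates for each scenario and compare the total with the session length. The sketch below is only an illustration: the scenario names, the minute estimates, and the ten-minute overhead for introductions and questionnaires are all assumptions, not figures from a real study.

```python
def minutes_over_budget(scenario_minutes, session_minutes, overhead_minutes=10):
    """Return how many minutes the script overruns the session (0 if it fits).

    overhead_minutes covers the introduction, pre-test and post-test
    questions, and wrap-up; the default of 10 is an assumption.
    """
    planned = sum(scenario_minutes.values()) + overhead_minutes
    return max(0, planned - session_minutes)


# Hypothetical estimates for a 60-minute session.
estimates = {
    "Find a disease": 15,
    "Compare description pages": 20,
    "Decide what to do next": 15,
    "Stakeholder add-on questions": 10,
}
print(minutes_over_budget(estimates, session_minutes=60))
```

If the number is greater than zero, something has to go, and the objectives should tell you what.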

Usability newbies

Inevitably, you’ll be presenting your test plan to a roomful of stakeholders, one or two of whom aren’t familiar with usability testing. They may start questioning the purpose of the exercise, or worse, your methodology. If your discussion is derailed by these kinds of questions, you should whip out your usability testing elevator pitch—the three-sentence description that at once gives an overview of usability while shutting down this line of questioning. Don’t have a usability elevator pitch? This is your reminder to come up with one, or just use this one, free of charge:

Usability testing is a means for us to gather feedback on the design of the system in the context of specific real-world tasks. By asking users to use the system (or a reasonable facsimile) we can observe opportunities to improve the design, catching them at this stage of the design process rather than later when changes would be more costly. Usability testing has been built into the project plan since day one. We need to get through the plan because we have users scheduled to come in next week, but if you want to talk further about usability testing, we can discuss it after the meeting.

Feel free to modify this to suit your needs.

Anatomy of a Plan

The content of the usability plan has to answer at least two questions: What are you doing, and why are you doing it?

The icing on the cake in a usability plan is a description of the methodology and the recruiting process. A detailed script is the ice cream on the side. (Either I’m craving refined sugar or usability testing makes me think of birthday cake.)

Organizing Plans

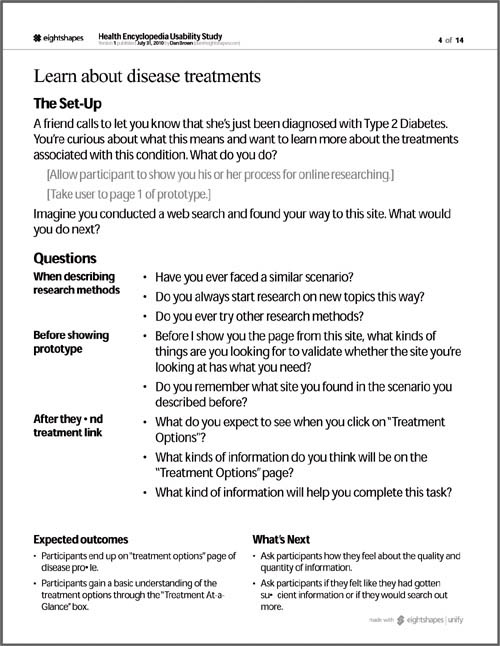

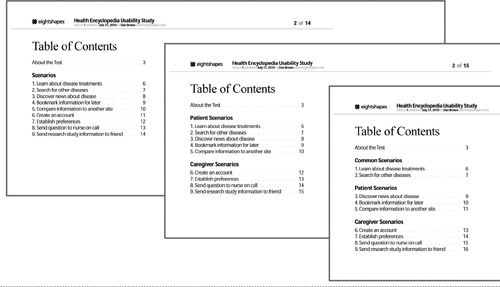

Here’s a good table of contents for a usability plan:

Table 11.1. The usability plan should provide a one-page summary of the whole test so the project team has an at-a-glance understanding of what it is all about. The scenarios chapter may be repeated if the test covers multiple user groups, and each group has a substantially different set of scenarios.

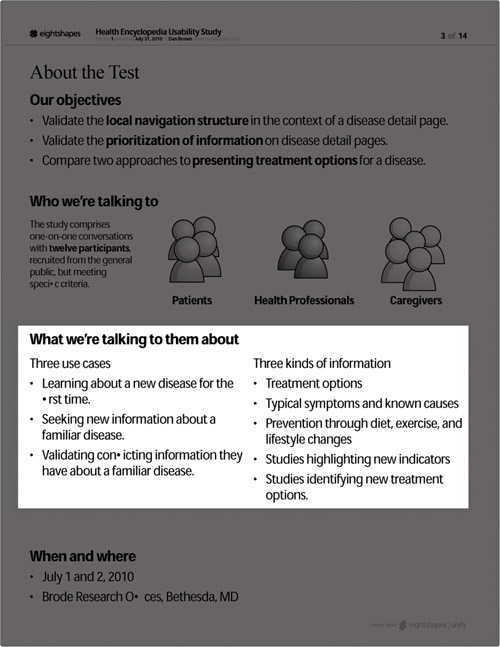

Summarizing the Plan

Describing the usability activities on a single page helps stakeholders and other team members understand the kinds of information you will get out of the test. It sets their expectations about what the test is and what it is not.

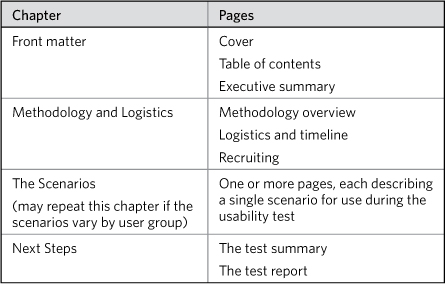

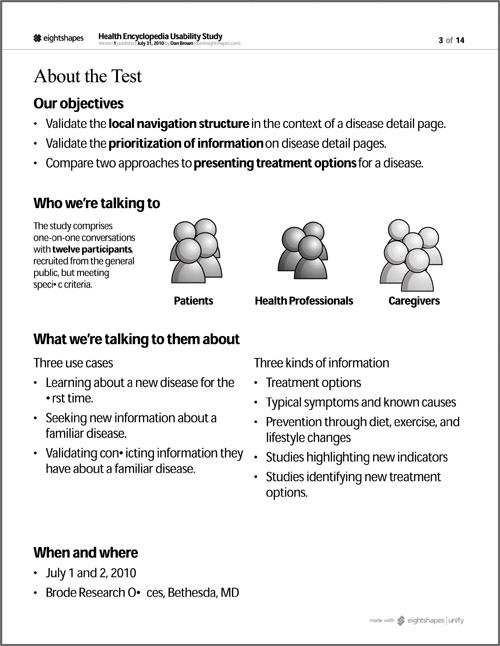

Figure 11.1. Summarizing the study on a single page answers the central questions about any usability test.

Figure 11.2. Summarizing participants with a picture, relating them to personas.

Figure 11.3. Listing scenarios describes the content of the test. In some cases, you might have to generalize or consolidate scenarios.

Who are you talking to?

The summary should describe the participants in the study. A simple sentence suffices for the summary page:

We will talk to nine people: three caregivers, three patients, and three doctors.

You can use personas, if you have them, describing the range of recruits according to which profile or behavioral dimensions they conform to.

Providing a rationale for your focus may require too much space on a summary page. If recruiting, or the makeup of your participant pool, is a hot topic, consider giving it a page unto itself.

What are you asking them?

Frankly, if you can’t put a list of scenarios, generalized across user groups, on your summary page, your test might be too long. A list of scenarios best conveys the content of the test.

What are you looking for?

By basing test objectives on user motivations, you get objectives that look more like these examples for a fictitious health encyclopedia:

• To determine whether people who come to the site with a specific illness in mind are satisfied with how they located relevant information.

• To determine whether people who are doing research on behalf of another person can easily refer someone else to the information they find.

• To understand how people who have just been diagnosed with an illness want to revisit their research.

These objectives may be used together; nothing says your test must have only one objective. Once you’ve written up a couple, you can do a quick litmus test. If you state the objective in the form of a question, is it an answerable question? A vague objective fails this test: the test designers could ask themselves, “Is this site easy to use?” which would not be an easy question to answer. On the other hand, you could probably easily answer the question, “Are people who have a specific illness in mind satisfied with how they found relevant information?”

Scenarios

Scenarios make up the bread and butter of a usability plan. They describe what imaginary situations participants face, setting up a task or a challenge for them to address using the version of the site you put in front of them. (I dunno, stating it like that makes it seem kinda mean.)

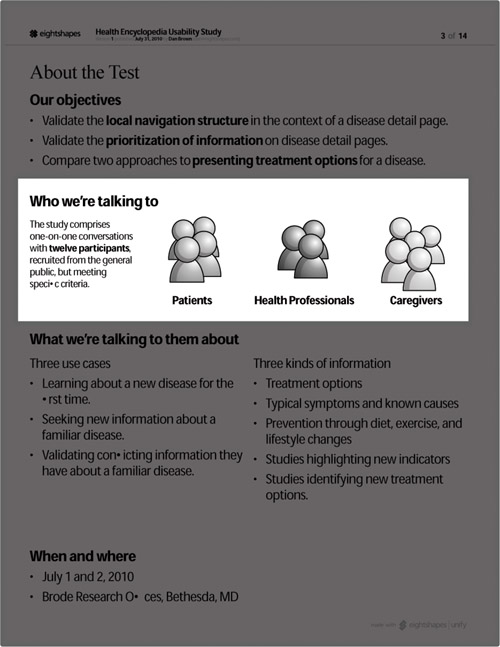

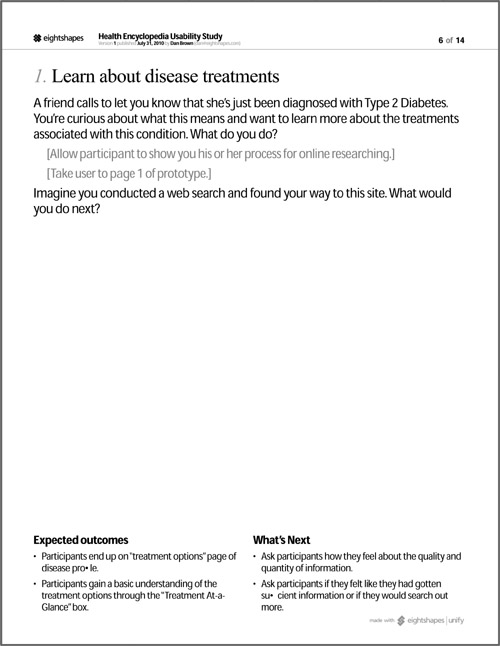

From the plan’s perspective, a bare-bones scenario consists of:

• Setup: The part you read to the participant so they understand the situation.

• Expected results: The description of how to deal with the situation correctly.

• What’s next: The transition to where users go next.

When describing a scenario in a test plan, typography is more important than pictures. (Showing screens of what participants should be doing can be helpful, but the plan has a more important function.) With typography, you can distinguish the three parts listed earlier.

Putting scenarios on separate pages can help the moderator pace the test and provide a simple bookkeeping system for tracking each scenario.
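If you keep your scenarios in a structured form, the three parts and the script-style formatting can be generated consistently. What follows is a minimal sketch, not a prescribed tool: the `Scenario` class, the `render_page` function, the `SAY:` prefix, and the square-bracket convention for stage directions are all hypothetical choices, and the expected results and next step shown are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """One test scenario, split into setup, expected results, and what's next."""
    title: str
    setup: str             # read aloud to the participant
    expected_results: str  # for the moderator only
    whats_next: str        # for the moderator only


def render_page(number: int, scenario: Scenario) -> str:
    """Lay out one scenario as a plain-text script page.

    Spoken text is prefixed with SAY; moderator-only notes are
    wrapped in square brackets, like stage directions.
    """
    lines = [
        f"Scenario {number}: {scenario.title}",
        "",
        f'SAY: "{scenario.setup}"',
        "",
        f"[Expected results: {scenario.expected_results}]",
        f"[What's next: {scenario.whats_next}]",
    ]
    return "\n".join(lines)


flu = Scenario(
    title="Flu symptoms",
    setup=("You have just come down with the flu and you want to see "
           "if any of your symptoms put you at high risk."),
    # The two fields below are hypothetical examples.
    expected_results="Participant reaches the flu page and finds the risk information.",
    whats_next="Return to the home page before the next scenario.",
)
print(render_page(1, flu))
```

Rendering each scenario separately makes it easy to give every scenario its own page.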

Figure 11.4. The usability scenario description page may have a lot of white space, but giving one page to each scenario helps the moderator pace the test.

Questions

You can incorporate specific, targeted questions about the scenario into the scenario description. With explicit questions, the plan starts to look even more like a script, giving the moderator very specific things to say at specific times during the session. Somewhere in between “strict questions” and “no questions” is “questions as conversation guide.” I like having them in front of me.

Figure 11.5. Adding questions to the usability page gives moderators a clear set of topics to ask about each scenario.

Scenario variations

The structure of the test might require varying the scenarios. (There can be any number of reasons to do this, but the most common is that the scenario changes for different types of users.) The structure of your plan should reflect the nature of those variations.

If only one or two scenarios vary, you can create a separate page for each variation and place it immediately following the main scenario.

If the majority of scenarios vary, you should give them their own chapter. This is clean if each chapter reflects a particular user group, for example. If user groups share some scenarios, you may want to repeat them in each chapter to make the flow of the test easy to follow. If you can get the common scenarios out of the way at the beginning or end of a test, you can dedicate a chapter to them before or after user-specific chapters.

I’d be hard-pressed to think of a reason to describe scenarios in an order other than the one you will use during the course of the test. Reviewing a test plan, the project team needs to understand how the test sessions themselves will run.

Pre-test and Post-test Questions

Some usability testing methodologies suggest that you ask users questions before and after the actual test. At the beginning of the test, before showing participants any screens or prototypes, you can establish their expectations with respect to the web site and their general level of experience. You can clarify their motivations for using your site and flesh out your profile of them.

The format for these questions will vary depending on your methodology. Some usability testers ask the questions of the user in person, recording their answers by hand. Others give participants a questionnaire that they can answer separately. (This approach allows the facilitator to spend more time focusing on the usability scenarios.) If you decide to let participants answer the questions on their own, you may want to separate these questions into a different document because it will be something you hand to them. In that case, you may need to format it differently, and—if the participant is to answer the questions without intervention from the facilitator—you’ll certainly want to be explicit about directions.

Pre-test questions can help you get a handle on the user’s expectations and experience before seeing the site for the first time. Questions after the test can gauge overall impressions and allow general pain points and suggestions to surface. Formatting for pre-test and post-test questions can include open-ended questions and questions with predefined responses.

Examples of open-ended questions:

• We’re developing a new system to support your sales process. What are the most challenging tasks in the sales process for you?

• The web site we’re building is called FluffyPuppies.com. What do you think you’d find on this web site?

• What system do you currently use to manage your family videos?

Examples of questions with predefined responses:

• On a scale of 1 to 7, where 1 is strongly disagree and 7 is strongly agree, how would you rate this statement: I found the web site easy to use.

• What level of Internet user are you? Beginner, intermediate, advanced?

• How long have you been involved with the sales process?

Regardless of how you deliver the questions to the participants, you should write the questions exactly as you intend to ask them. This can help ensure that you ask the question the same way every time.

Test Logistics and Recruiting

Good plans incorporate logistics and scheduling. Whether you need to state these explicitly in the document is up to you. Summarizing the when, where, and how of the test on a single page can reassure stakeholders that you’ve taken care of all those things, even if they don’t see all the little details. You can also use this section to remind the project team of the timeline. Generally they want to know when they will see results.

Figure 11.6. Varying the structure of the usability plan to reflect the structure of the test.

Figure 11.7. Test logistics summarized as a simple when and where can satisfy even the most curious project team.

Detailed Recruiting Information and Screener

Although the logistics information from earlier elements should reference the kinds of people you’d like to recruit, you can always provide more detail. The more complex the test, the more detail you’ll want to give because you may be testing different user groups. Defining profiles for each group allows you to design an appropriate test for each group. If you’ve done user personas (described in the previous chapter) you can draw straight from those. Here’s a user profile for a parenting web site:

The Day Job Parent needs to work outside the home. He sees his kids in the morning, maybe drops them off at the bus stop, and then sees them again in the evening, when he comes home from work. The Day Job Parent may steal a glance at this web site during a lunch break, seeking advice on specific child-rearing problems. He may spend a bit more time on the site in the evening after the kids are in bed. In the Day Job Parent’s house, both spouses work. They are both familiar with Internet technologies, and have shopped and researched health issues online.

High-level descriptions of users are one thing, but actually finding people who meet those criteria is another. If you don’t have immediate access to people from the target audience, you need to put together a screener. A screener is a set of questions to ask potential participants to see if they fit your needs for the usability test.

You can embed the screener right in the plan, to show the project team what tools you will use to recruit participants. If you don’t have a screener at the time you share the plan, insert a summary describing the kinds of criteria you’ll use. Such a starting point gives the project team something to react to, and the recruiting agency a sense of what you’re looking for.

Some screener questions are pretty straightforward, asking about experience with using the Internet or shopping online. Some will be more specific to your particular application. For example, for the fictitious online health encyclopedia, your screener might seek out people who have recently been to the doctor, or who visited a health site in the last three months. Such questions will help narrow the pool of applicants to those who best match your target user group.

Usually, recruiting from the general public is done by a recruiting agency. They have a large list of people to draw from. If you’re using an outside agency, you’ll need to provide as much detail in the screener as possible. Indicate when a particular answer to a question should eliminate a potential participant. Indicate quotas for particular answers. You may want, for example, half your participants to have visited a health site in the last three months, and half to never have visited a health site.
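To make eliminating answers and quotas concrete, here is a minimal sketch of how a screener's rules might be applied to a list of candidates. Everything in it is an assumption for illustration: the question keys (`uses_internet`, `visited_health_site`), the quota sizes, and the candidates are hypothetical, and a real recruiting agency will have its own process.

```python
from collections import Counter

# Hypothetical screener rules for the fictitious health encyclopedia.
# An answer listed here eliminates the candidate.
DISQUALIFYING = {"uses_internet": {"no"}}
# Quotas: at most this many participants per (question, answer) pair.
QUOTAS = {
    ("visited_health_site", "yes"): 2,
    ("visited_health_site", "no"): 2,
}


def screen(candidates):
    """Return accepted candidates, honoring eliminations and quotas."""
    filled = Counter()
    accepted = []
    for person in candidates:
        # An eliminating answer removes the candidate outright.
        if any(person.get(q) in bad for q, bad in DISQUALIFYING.items()):
            continue
        # Find the quota cells this person's answers fall into.
        cells = [(q, a) for (q, a) in QUOTAS if person.get(q) == a]
        # Skip people who match no quota cell or whose cell is already full.
        if not cells or any(filled[c] >= QUOTAS[c] for c in cells):
            continue
        for c in cells:
            filled[c] += 1
        accepted.append(person)
    return accepted


candidates = [
    {"name": "A", "uses_internet": "yes", "visited_health_site": "yes"},
    {"name": "B", "uses_internet": "no", "visited_health_site": "yes"},
    {"name": "C", "uses_internet": "yes", "visited_health_site": "yes"},
    {"name": "D", "uses_internet": "yes", "visited_health_site": "yes"},
    {"name": "E", "uses_internet": "yes", "visited_health_site": "no"},
]
print([p["name"] for p in screen(candidates)])
```

Writing the rules down this explicitly, even on paper, tells the recruiter exactly when to stop accepting a particular kind of participant.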

The Best-Laid Plans

The methods and processes for gauging the usability of our design work are changing. Whereas lab-based testing (recruiting participants, bringing them to a lab, watching them use the product) has been entrenched for years, it is being supplemented with other forms of testing. Technologies allow designers to watch people use web sites in real time, gathering data as it happens. We can recruit people from across the globe, ask them to participate in an unmoderated usability study, and aggregate data across multiple sessions, all from the comfort of our desks.

These capabilities have raised some worthwhile and difficult questions in the design community. How much should design decisions be informed by data? Must we respond to every usability problem surfaced? How much feedback is too much feedback? For years we sought to be closer to the target audience, to be able to watch them in real time and solicit input. Now that such a technique is a reality, we wonder if we should be careful about what we wish for.

To me, these controversies point to more careful planning, an increasing need to establish the parameters for studying users relative to the product. A document answering some basic questions provides, in a sense, a contract. Everyone on the project should have a shared understanding of what kind of information will come out of a usability study and how that information will be used.

A good usability plan does more than just incorporate some clever scriptwriting and scenario-building. It establishes a role for the usability test in the design process. It moderates the voice of the user, couching it in a context that helps the project team interpret the results. It positions that input among the countless others the design team needs to accommodate.

In assembling a usability plan, it can become easy to get lost in planning logistics, writing scenarios, and composing interview questions. There is a lot to think about. In light of all of it, keeping things focused on the ultimate objectives is hard. And I’m not talking about the objectives for the test (though that’s pretty hard, too). The ultimate objective is informing the design process. A good usability plan must imply, if not articulate explicitly, how the design team will incorporate the results and integrate them into the landscape of the project, treating testing not as a stand-alone activity but as a constant source of feedback and inspiration.